In 1999, the world’s first commercially available color video and camera phone arrived in the form of the Kyocera VP-210 in Japan. A year after its release, worries over the rapid rise in “up-skirt” voyeurism the phones enabled spread quickly throughout the country, prompting wireless carriers to institute a policy ensuring the phones they offered would feature a loud camera shutter noise that users couldn’t disable. The effectiveness of that measure is, to this day, up for debate. But the episode remains a useful history lesson on the widespread adoption of technology: new tools make doing everything easier, and not just the good things.

Prompt-based AI art generators are now having their VP-210 moment. Once programs like Midjourney, DALL-E, and Stable Diffusion began proliferating in the summer of 2022, it was only a matter of time before users started creating and iterating on hypersexualized and stereotyped images of women to populate social media accounts and sell as NFTs. But AI’s detractors need to avoid conflating the tech’s inherent potential and value with its gross misuse, even when those misuses are blatantly sexist.

Likewise, if its proponents want to defend the AI art revolution, they need to make it clear that they’re ready to have this conversation and work toward solutions. If they don’t, the movement will rightfully lose its credibility, and the maligned tech will face an even steeper uphill battle than it already does.

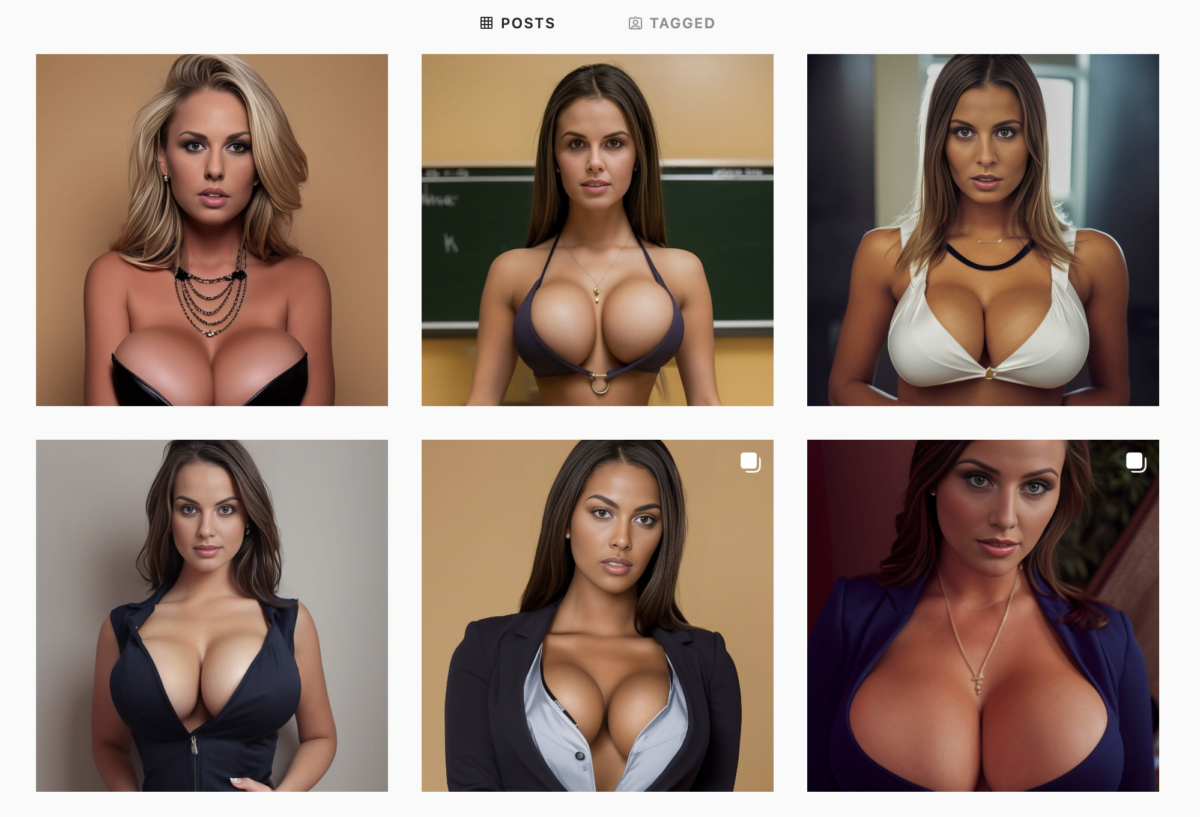

Social media has long had a reputation for fueling low body satisfaction and self-esteem, in women especially. Misuse of AI art tools has the potential to exacerbate this problem. A quick search on the most popular social media platforms (for terms we’re choosing not to reveal here to avoid encouraging their use) returns a variety of accounts dedicated to showcasing AI creations depicting photorealistic women (those showing men are comparatively few and far between).

The images in these accounts range from tasteful and artistic to outright grotesque and pornographic. Most fall into the latter category, presenting portrayals of women that stray so far into objectification as to reach parody. While we’re including images from an assortment of accounts of this nature in this article, we’ve decided not to include identifying information about them to avoid giving a platform to those who, according to expert consensus, promote stereotypes and cause harm.

While well-intended content policies from programs like DALL-E and Midjourney prevent users from including “adult content” and “gore” in their prompts, the near-limitless flexibility of language allows users to circumvent many of those policies’ limitations with some ease. The fact that these AI systems have inherent biases against women built into them has only made this phenomenon worse. There’s also nothing stopping individuals from building and training their own AI models, freeing them from such restrictions altogether.

Distilling the objectification of women

The conversations surrounding the objectification-empowerment dynamic of women in the fashion, entertainment, and porn industries are complex, nuanced, and essential ones, but they all revolve around the agency and dignity of human beings. What makes the reductive and debased images of AI-generated women feel so sinister is not unlike what makes deepfakes so reprehensible: the tech strips away those pesky moral hangups regarding consent and personhood and distills the very essence of sexual objectification into its purest form.

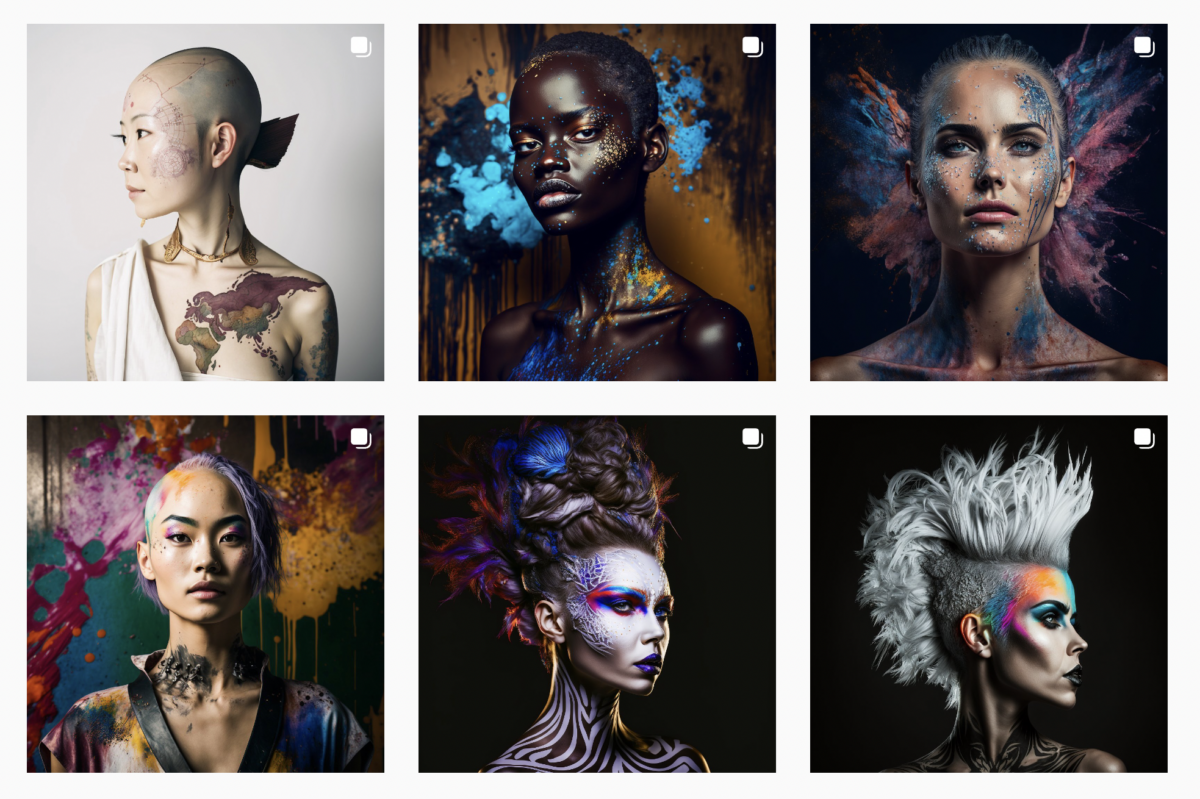

On a broad scale, images of people produced by AI art tools often blend the intimate and the alien. Some would argue that this is even part of their appeal: an ability to both spotlight and subvert the uncanny valley in truly artistic and thought-provoking ways.

The same can’t be said of the AI-generated women so often seen in accounts on Twitter and Instagram. They’re far more unsettling representations, not just because they’ve emerged from algorithms trained on unknown billions of images of real-life women, but because the images are constructed by prompting for the sexualized body parts of women. The result is a strange, echoing specter of countless faces and bodies synthesized into a masquerade of reality.

Can AI art tools celebrate women instead?

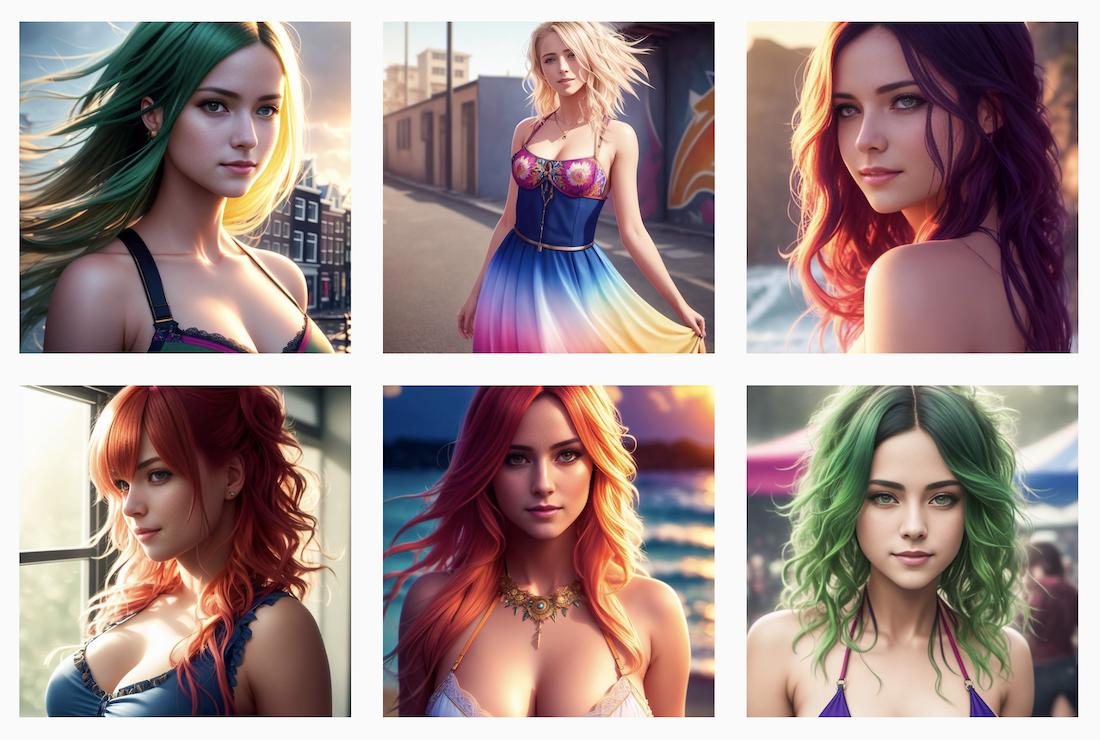

The line between objectifying and empowering blurs quickly. Several social media accounts featuring AI-generated content are run by women, for example, and claim to celebrate women in their diversity, beauty, and cultural contexts. Rather than creating and posting caricatures reduced to little more than their sexual organs, accounts like these tend to present women from the shoulders up, and the results resemble something closer to actual human beings. This is progress of a sort, but even on such pages, most of the women depicted have strikingly similar bone structures and body types, not unlike the so-called “same-face syndrome” that has plagued Disney productions for years.

Still, these differences in degree matter. An increasing number of NFT collections are beginning to use AI in their creation, for example, and many that represent women are arguably doing so in a respectful and innovative way. Musess is one such collection, having built its NFTs from AI’s interpretation of artist Eva Adamian’s artwork depicting the nude female form. The collection, the project website states, was born of the desire to create something that showed the “borderless, inclusive, and unveiled beauty” of women.

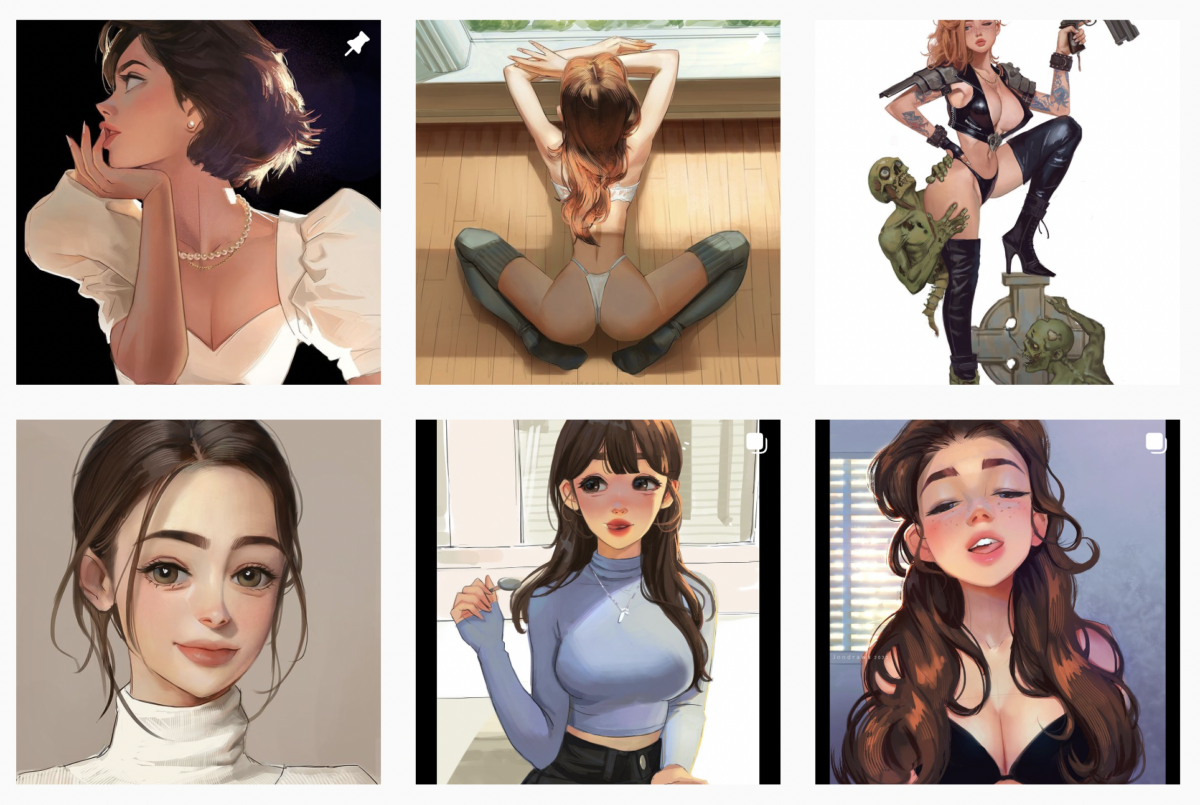

This isn’t a new problem

Women being objectified in artistic media is nothing new; AI has simply made it easier. This is illustrated by the fact that Instagram’s algorithm quickly steers you from accounts depicting AI-generated women to others of a similar nature, except that the women on those pages were hand-drawn by illustrators. Aside from the stylistic differences, there is little to distinguish the two in how they perceive and present women. Just as AI art is art in a different form of expression, objectification is objectification, regardless of the medium in which it’s expressed.

AI’s advocates need to lead the charge for change

Every technology used to create art in the past has also been used to depict women on a spectrum ranging from dignified to one-dimensional. AI is simply the latest innovation to be used this way. Ironically, the arrival of a complex and important conversation about AI’s role in the objectification of women is a sign of progress. Fundamentally, the problem of people using the tech to reduce women to their sexuality alone is no different than it has always been.

Supporters of AI and AI art tools need to keep this in mind. A common argument among proponents holds that, while these tools are unfortunately being used to plagiarize artists’ work and vision, that is no reason to dismiss the technology outright or deny the good it’s doing in the world. Surely, the spread of tools that creatively empower billions of people globally must justify their existence, right?

The answer is yes. But the other question is this: will AI’s supporters acknowledge the validity of concerns that have less to do with ethics in the art world and more to do with human dignity and expressions of sexism?

AI art tools are going to run a gauntlet of criticism, both legitimate and hollow, before they come out the other end on par with every other piece of technology we use in our daily lives. Until then, they need to weather the storm, even when that storm includes a proliferation of sexist images made possible as a direct result of the tools. The Kyocera VP-210 and every phone that came after it made it easier to take photos of women without their consent. Any reasonable person can and should feel disturbed by that fact. But such deplorable behavior makes a poor knock-down argument against the idea of the camera phone itself. As with every technology, society needs to find a way to move forward with AI while working to minimize its misuse as much as possible. Now is the time for AI art proponents to lead that charge.